The Power Circle: A Human-Centered Approach to AI Strategy with Design Thinking

Because AI won’t make a difference until it clicks, for your team, your system, and the people you serve.

Last month, LinkedIn named AI literacy the #1 rising skill in the world.

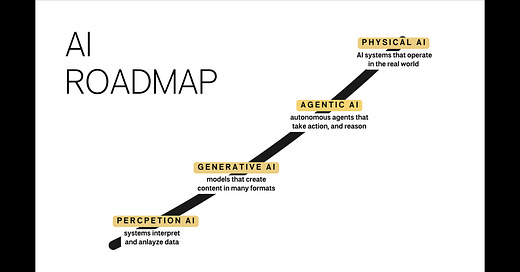

A few days later, NVIDIA CEO Jensen Huang opened GTC by outlining the road to Agentic AI. And this week, OpenAI announced $40 billion in new funding to scale tools already used by over 500 million people each week, tools explicitly designed to move us closer to Artificial General Intelligence (AGI).

“AGI refers to highly autonomous systems that outperform humans at most economically valuable work.”

— OpenAI Charter

This moment isn’t just about smarter tools.

AGI represents a shift, from assistant to collaborator, from automation to autonomy.

It’s when AI stops following instructions and starts shaping outcomes.

And while most people are still asking, “What can AI do?”, AGI wants you to ask something far more powerful.

Because it doesn’t just raise the bar for what’s possible. It raises the bar for what we dare to imagine. It forces a new kind of ambition, not just in what we create, but in who we become in the process.

The Experts Are Sounding the Alarm

We’re closer to AGI than most people think, and some of the people building it are openly saying so.

Kevin Roose, NYT tech columnist, recently wrote:

I believe that most people and institutions are totally unprepared for the A.I. systems that exist today, let alone more powerful ones, and that there is no realistic plan at any level of government to mitigate the risks or capture the benefits of these systems.

(The New York Times, March 2025)

Dario Amodei, CEO of Anthropic (creator of Claude), said:

It is my guess that by 2026 or 2027, we will have A.I. systems that are broadly better than all humans at almost all things.

(Davos, January 2025)

There is debate in the industry over what it means to achieve “AGI.” As Kevin Roose wrote in his article:

I believe that when A.G.I. is announced, there will be debates over definitions and arguments about whether or not it counts as “real” A.G.I., but that these mostly won’t matter, because the broader point — that we are losing our monopoly on human-level intelligence, and transitioning to a world with very powerful A.I. systems in it — will be true.

I often share how my “AGI” moment was back in September 2024 when OpenAI announced their first reasoning model. When asked how it was trained to do such complex tasks, they replied with this statement.

As an educator and design thinking practitioner, that shook me to my core, because that’s exactly what we try to teach humans to do. And you realize, we’re living in a time where we train machines to think more deeply, while giving people less and less time to do the same. That’s the tension I explore in my book, Designing Schools. Because if we’re designing AI to think more deeply, but not creating space for people to do the same, what kind of future are we actually building?

We’re Not Ready

While AGI is still emerging, how we prepare for it now will define what’s possible later.

This isn’t a future conversation.

It’s a now conversation.

And most systems, especially in education, are nowhere near ready.

The stakes have never been higher. The pace has never been faster.

But speed without strategy leads to misalignment, not momentum.

In the age of AI, as leaders you aren’t just asked to make good decisions.

You are expected to make fast ones, with limited context, changing tools, and systems that weren’t built for this.

There’s no time for five-year plans.

Sometimes, there’s barely time for five-day ones.

And still, the pressure builds:

“Do something with AI.”

But while the world races toward AI implementation, most are quietly asking:

What does AI literacy actually mean in our context?

How do we move forward without falling into hype or fear?

What real problem are we solving, beyond just using the tools?

That’s why we created The AI Power Circle.

Not to chase trends.

Not to teach tools.

But to create the kind of clarity that makes AI click, with strategy, empathy, and design thinking at the core. That clarity doesn’t come from a prompt or a platform.

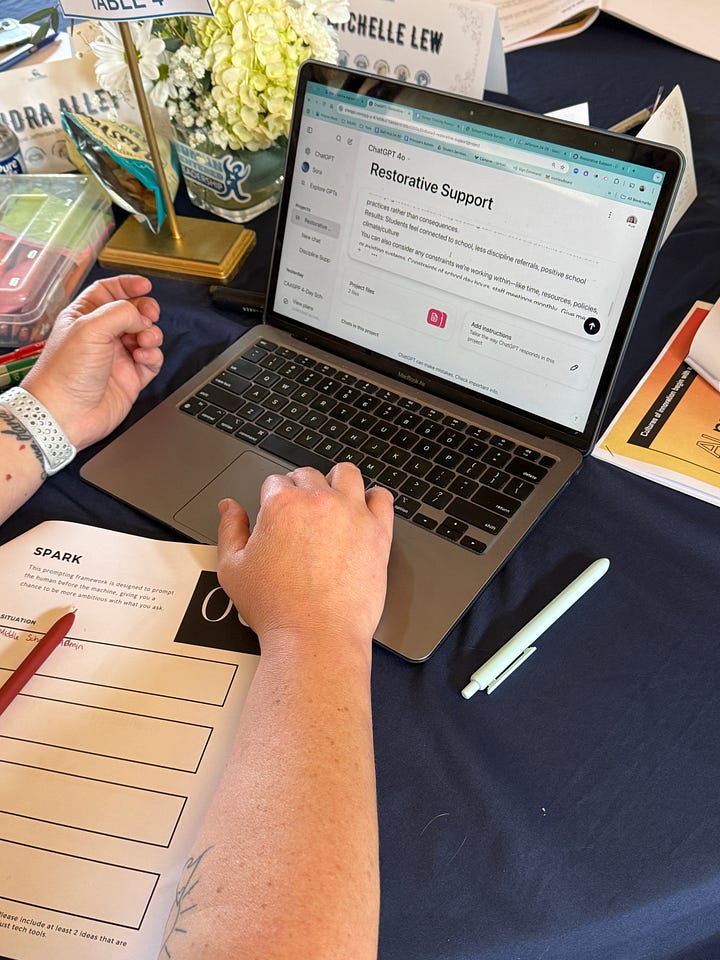

It comes from pausing long enough to ask better questions, together. That’s what the team at San Gabriel Valley Unified School District hosted for a group of districts last week.

Inside the Power Circle: What This Experience Actually Looks Like

Before the workshop even begins, deep preparation is essential. Because when you bring senior leaders and decision-makers into the room, you’re asking for their most valuable resource: time.

That’s why we study the data, map the user journeys, and understand the landscape. Where’s the friction? What’s already been tried? What needs to be true for this to succeed?

And then comes the real work: facilitating a conversation across teams who often have different versions of the truth. One sees it as a tech problem. Another, a comms issue. Another just wants it done fast, because “this is what the teachers are asking for.”

The Power Circle provides a structured way through that noise. We use design thinking to create space for alignment, not by guessing, but by framing real problems with real insight.

And when teams walk out of the room, they leave with something that actually moves them forward: a shared understanding of what matters, and a validated statement they can build from. This statement is called a founding hypothesis.

The Power Circle isn’t a keynote. It’s not a demo day. And it’s definitely not a crash course in how to use AI tools. It’s a strategic design sprint grounded in reflection, empathy, and problem framing. We built it for school and district teams who need to move fast, but want to lead with intention.

The goal?

Help teams define the right problem, before they invest in the wrong solution. Here are a few highlights.

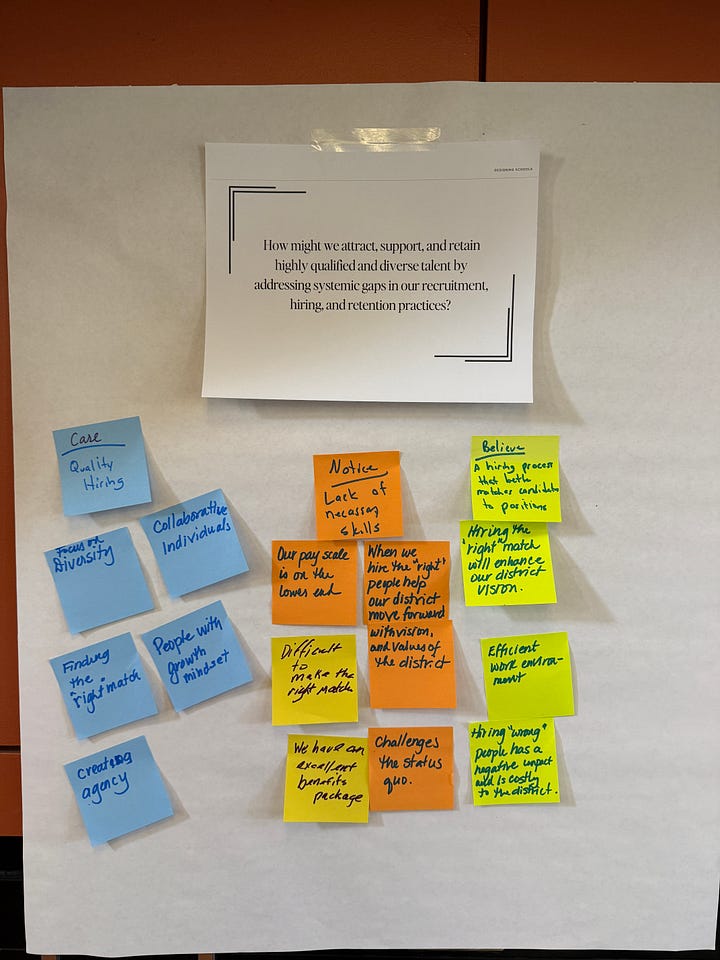

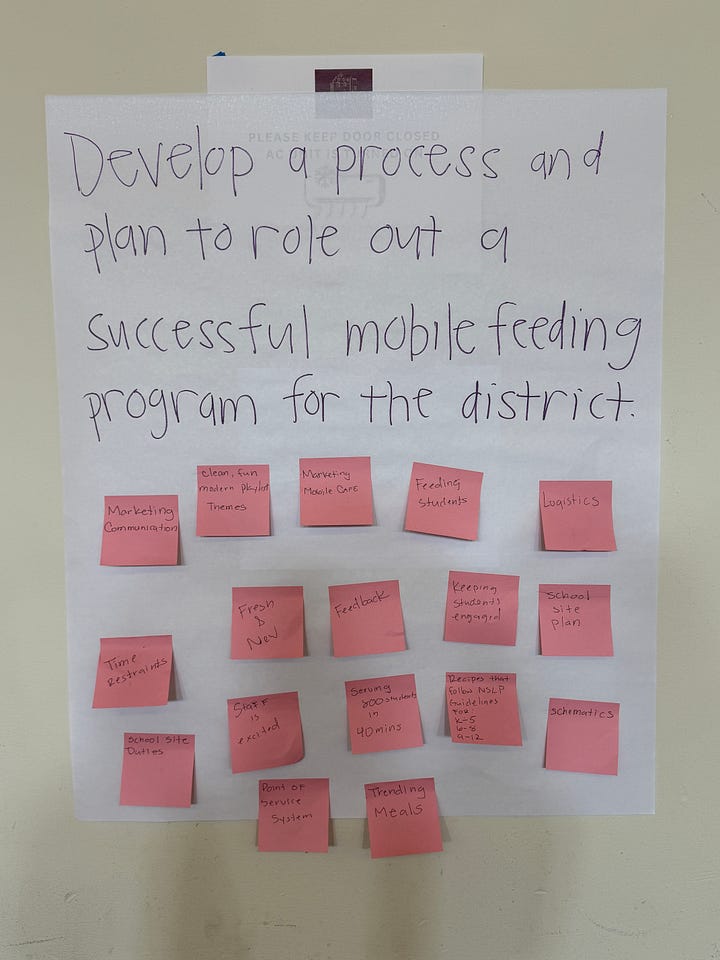

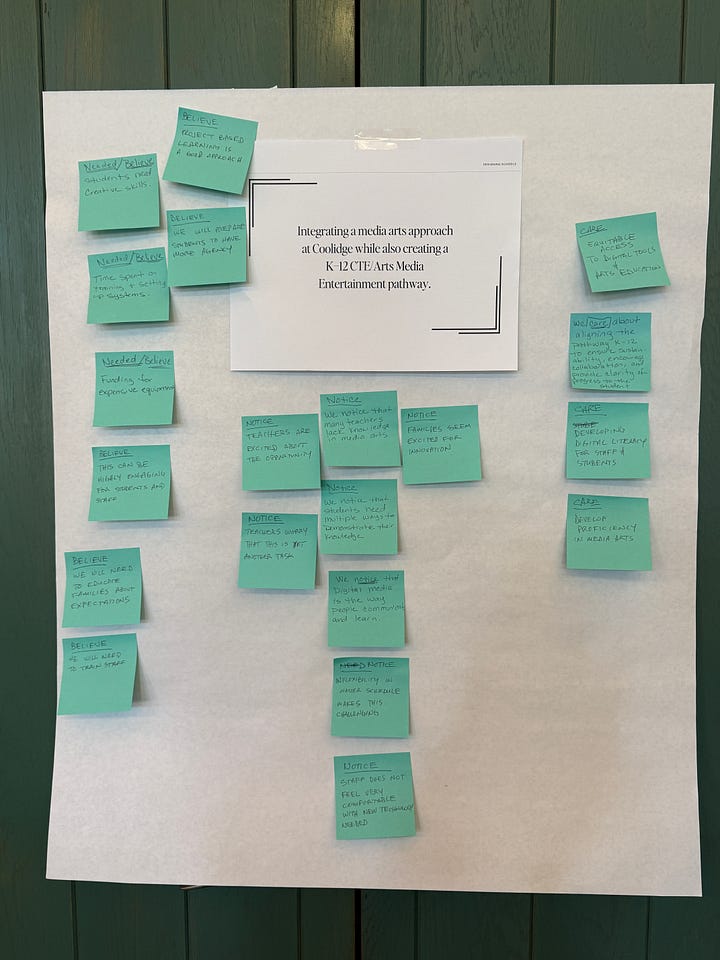

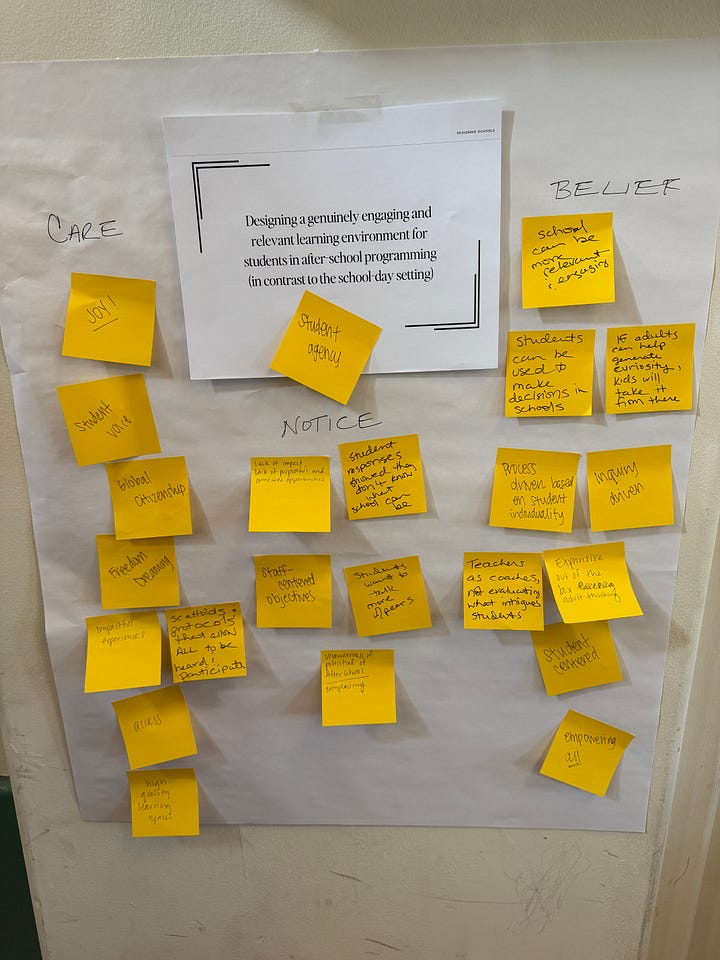

Signals and Tensions

Before we even mentioned AI, we invited teams to notice the signals already emerging in their own space: what patterns they were seeing, what tensions they were feeling. Because before we can design with AI, we have to design with awareness. Too often in these workshops, the focus rushes straight to AI. We wanted to do the opposite: start with people, patterns, and the friction already showing up in their work.

We started by helping teams surface Signals and Tensions: what they were noticing, and what was getting in the way. Then they reflected on their Sparketype, a framework for identifying the type of work that energizes them most. (Because understanding your own motivation is essential when designing with AI as a teammate.)

From there, teams shared their initial thinking using a simple frame:

What I care about.

What I’m noticing.

What I believe is needed.

Bringing in the Future

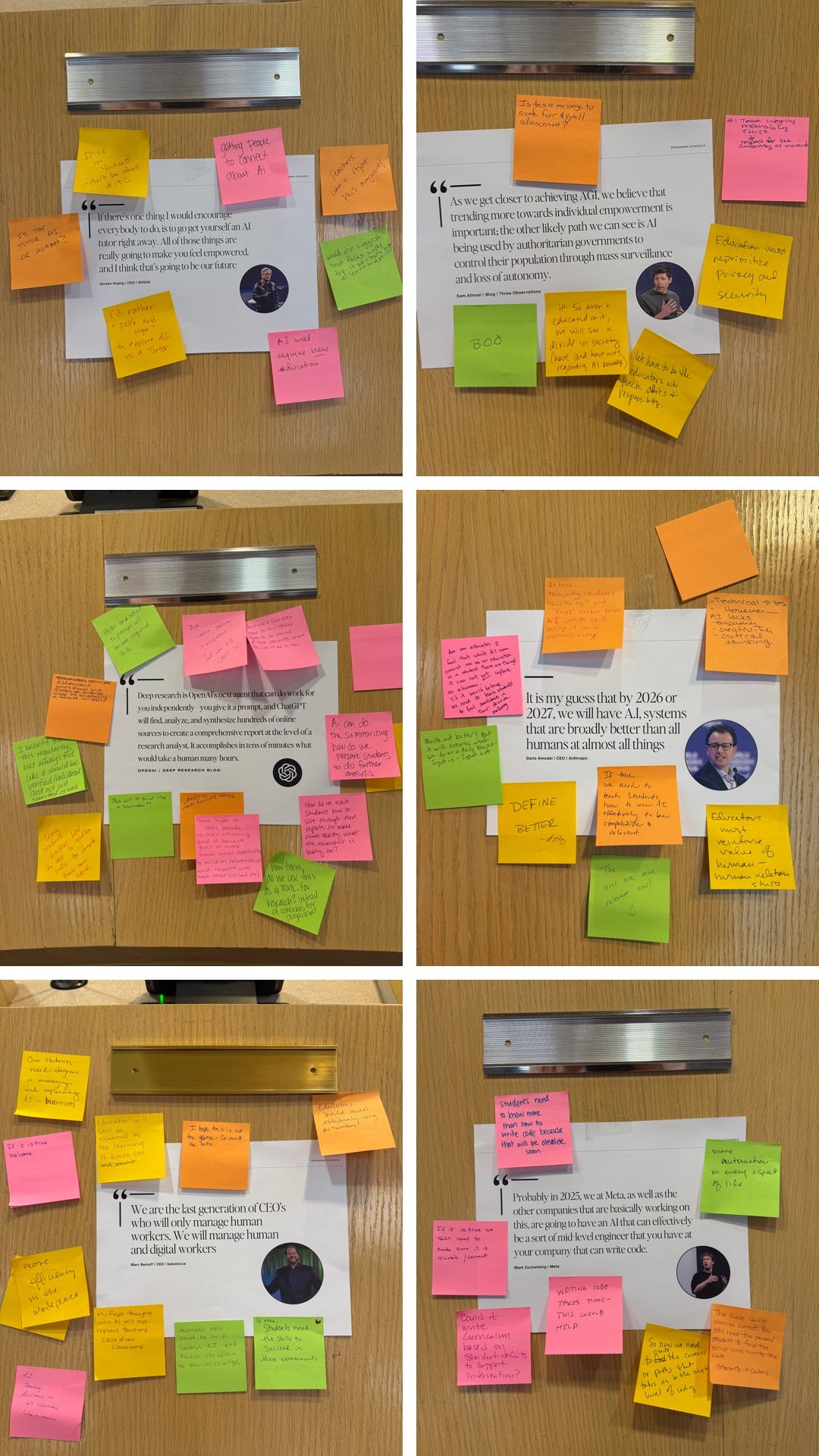

Those three sentences became the foundation of each team’s first problem statement, which they shared with one another. With those insights grounded in their lived experience, we were ready to expand the lens and bring in emerging trends around AGI. This step is critical for helping education leaders see beyond today and prepare for tomorrow.

Rather than starting with a lecture on AGI, we invited participants to walk through a gallery of bold, sometimes uncomfortable quotes from CEOs, technologists, and global leaders shaping the AI landscape in 2025.

We did this intentionally.

Because AGI isn’t just a technical leap. It’s an emotional one.

And the implications are too big to process all at once.

The gallery walk wasn’t about overwhelm. It was about opening. It gave participants space to pause, think bigger, challenge assumptions, and ask:

If these things are true, what needs to change in how we teach, lead, and design?

Most people don’t have time to read every report, watch every interview, or decode every shift. So we curated quotes and signals from the AI landscape to help teams reflect and think bigger. These weren’t just headlines. They were prompts designed to connect emerging trends with real decisions.

The results were powerful.

It’s one thing to talk about the future. It’s another to design with it in mind.

And that shift made something else possible: clarity.

From Clarity to Vision: Why a Single Statement Matters

After expanding their thinking with signals from the future, it was time to slow down again, and get specific.

Because clarity doesn’t come from hype. It comes from making meaning.

That’s the idea behind Click, the latest book from Jake Knapp and John Zeratsky, creators of the original Design Sprint. Their first book helped teams prototype faster. But Click zooms out, to the messy, foundational stage before any product exists.

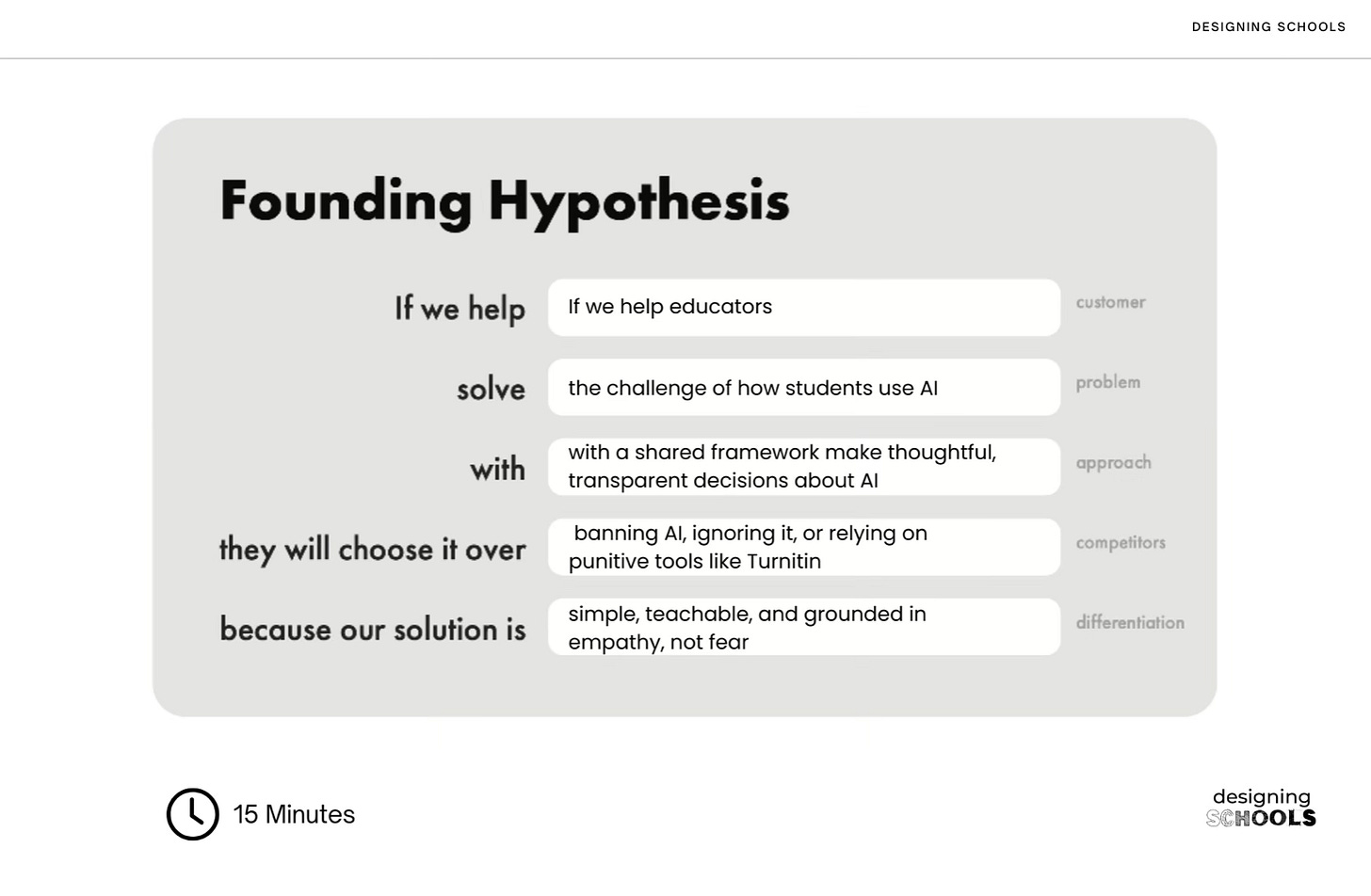

It introduces the Foundation Sprint: a method designed to help teams align on what matters before they start building. At the center of it is the Founding Hypothesis, a single, powerful statement that clarifies the problem, the people you're designing for, and what would make a solution truly resonate.

The goal of the Founding Hypothesis is to articulate a clear belief:

About the problem worth solving

About the people at the center of it

And about what would need to be true for a solution to actually work

Simon Sinek says, “Vision is the ability to talk about the future as if it were the past.” That’s what a great founding hypothesis does. It gives people a shared sense of direction, and belief that change is possible.

To help model this for our group, I shared my own Founding Hypothesis, centered on one of the biggest tensions in education today: AI and academic integrity.

Rather than default to bans or punitive tools, I believed we could help educators make thoughtful, transparent decisions using a shared framework. That belief led to the creation of the WISE Framework, a simple way to guide students in AI use through four pillars: Wellbeing, Integrity, Skills, and Engagement.

Because when a solution clicks, it doesn’t just solve a problem. It gives people language to lead. And the Founding Hypothesis behind it is a simple statement that emerges organically through a series of exercises.

Why It Feels Uncomfortable… And Why That’s Good

But here’s the truth: that kind of clarity can feel uncomfortable at first.

At one point during the session, a participant asked, “Why can’t we just use AI to do this part?” Others admitted they felt confused or unsure. And that’s the exact moment you know you’re doing it right. Because sitting in the problem space isn’t easy. It’s uncertain. It’s slow.

As facilitators, we need to be comfortable staying there, because we know what’s on the other side. That’s the power of a strong framework: it doesn’t rush you to an answer. It gives you the structure to wrestle with the right questions.

It’s a little like standing in the middle of a maze with no clear path out. The urge is to climb the walls or look for a shortcut, but instead, we ask participants to slow down, explore the corridors, and notice the patterns. With each step, they gain orientation. With each reflection, a new possibility emerges. And eventually, they see the shape of the maze and gain the clarity to move forward with confidence.

That’s what this phase of the workshop was about, resisting the rush to solve, and instead building the muscle to sense, reflect, and align before we act.

That’s why we didn’t lead with AGI theory. We started with real friction, real insight, and real time to think. Because the "click" doesn’t come from understanding a technology. It comes from seeing how that technology intersects with your purpose.

And in schools, that clarity matters more than ever. When the stakes are high and change is relentless, it’s easy to react. But when leaders can define what matters most, they stop chasing tools, and start designing a future where those tools click into place.

What Happens Next

What happens when school leaders don’t just talk about AI, but pause to ask what matters?

They walk away with:

Clarity on the real problem

Empathy for who they're designing for

A shared language to guide what comes next

The Power Circle isn’t a one-off event. It's the start of a new way of leading.

Because in the age of AI, we don’t just need more tools.

We need better thinking.

If you want to bring this experience to your team, let’s talk. You can learn more here.

This is cool. I love design thinking. Would be interested in hearing more about the WISE framework that emerged.

I think it’s really important that we have the courage as well as good processes to ideate, implement, and iterate on curricular and pedagogical approaches in the next few years. We also need to think with a futures lens about system leverage points and likely impacts. AGI may be the most disruptive thing humanity has ever faced.

At a minimum, millions of jobs will likely be lost, hitting those who are least AI-proficient the hardest:

https://open.substack.com/pub/garethmanning/p/high-tech-low-literacy-how-the-ai?r=m7oj5&utm_medium=ios