Over the past year, I’ve been openly critical of many AI tools marketed to schools and educators, not because they don’t offer utility, but because of what they represent:

A pattern of shallow substitution disguised as innovation.

We’ve taken a tool-first approach to AI adoption, and it has left us innovation-poor.

We’ve rewarded platforms that generate fast answers, not better questions. We’ve optimized for individual convenience rather than collective vision. And we’ve built habits around shortcuts: 30-second hacks that go viral while the deeper systems work gets left behind.

In many ways, the very thing we worry AI will do to learning (make it superficial, transactional, and detached from human meaning) is exactly what the industry has done to itself. And it’s not because educators don’t care. It’s because we’ve been sold the illusion of support. And that illusion, over time, has come at a cost.

When I ask decision makers why they chose a particular AI tool, the answer is almost never because it aligned with a learner profile, a district vision, or a theory of change. It’s almost always because it was what someone found first. What someone asked for. It was free. It was quick. It was easy. It got them to overcome their fear of AI.

Of course, those first steps matter. Many educators had their first meaningful encounter with AI because a tool made something less overwhelming. I respect that. But we need to be honest about where compromise becomes a pattern, and where that pattern leads.

It leads to disconnected tools, disjointed workflows, and exhausted educators. It leads to platforms that tell you what to do before you’ve even had a chance to imagine what’s possible, that flatten your creativity, and above all that dim your human spark. It leads to a field that feels increasingly fragmented, not just in tools, but in purpose.

That’s why, when I decide which AI tools to recommend, I hold them to three non-negotiable criteria:

Can I grow with it?

Not just use it once, but develop deeper, more strategic practices over time.

Does it inspire new ideas?

Not just speed up old habits, but invite new ways of thinking and teaching.

Can it scale across my system?

Not just live on my laptop, but support collaboration, customization, and above all our vision.

It’s with these three questions in mind that I believe things are different with Google.

With Gemini, NotebookLM, and the new Google Classroom integrations, we’re finally seeing AI positioned not as a standalone solution, but as a connected system. One that lets you start small (generate a quiz, plan a lesson, write a summary) but doesn’t stop there.

When you’re ready, the next door is already open.

You can create podcast and video overviews for complex concepts.

You can co-author research with AI across languages and modalities.

You can train custom chatbots (Gems) that reflect how you think, plan, and lead.

You can build systems that support feedback, reflection, and student progress toward competencies, not just completion.

This is the kind of design that doesn’t limit your imagination. It grows with it.

I shared these updates in my last post.

Your AI Journey

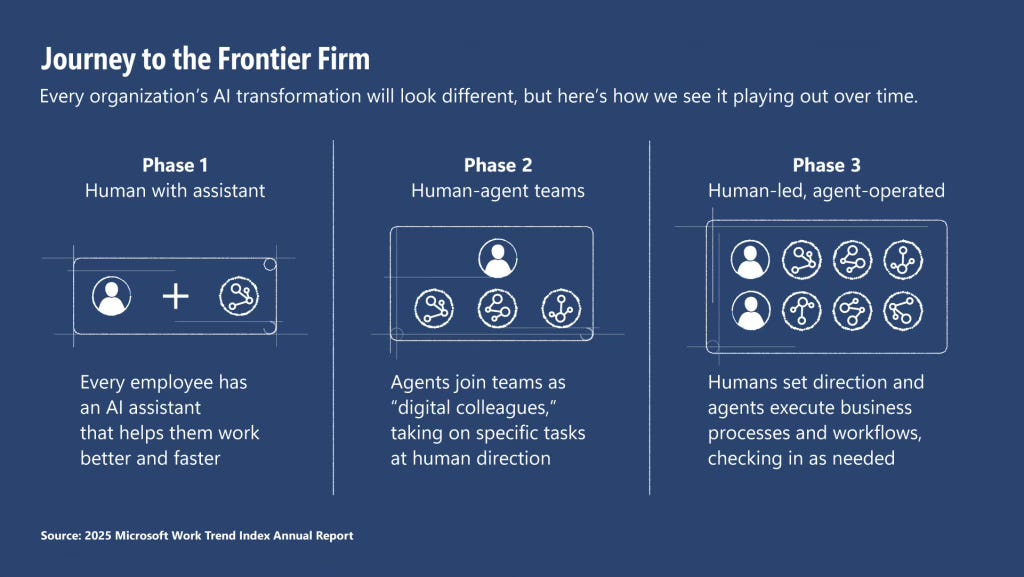

I often share how one of my favorite reports is the Microsoft Work Trend Index. And this year they shared three phases people will go through in their journey with AI.

Moving from Phase 1 to Phase 2 is as much about a mindset shift as it is about having the “right” tool. It requires us to rethink collaboration, not just among people, but between people and machines. Most people in schools won’t be able to integrate tools like Make.com, Zapier, Cursor, or the many others that we can use as individuals. These tools don’t just help you accomplish a goal. They teach you a core set of skills for working in partnership with AI.

And that’s the opportunity Google is offering schools right now.

With Gemini, NotebookLM, and Classroom integrations, we’re not just being handed a new assistant. We’re being invited to build AI teammates.

→ Systems that reflect how you plan, give feedback, and communicate

→ Tools that can be trained on your values and scaled across teams

→ Learning ecosystems designed to grow with you, not just automate what already exists

In this example I share what this looked like for me with just one teammate.

This isn’t about learning a feature.

It’s about building a skillset and mindset that you can take anywhere.

If we get this right, we don’t just get better tools.

We get better systems.

We get better work.

We raise the bar for what collaboration can look like, across classrooms, across schools, and across industries.

And the group that ultimately benefits is our learners.

This Isn’t New. I’ve Always Asked These Questions

These three questions (Can I grow with it? Does it inspire new ideas? Can it scale across my system?) weren’t invented for AI. They’ve always been the lens I use to evaluate whether a technology is worth investing in, sharing, or building with.

Years before generative AI entered the picture, we were already facing the same challenge: Will we use new tools to reinforce old habits, or will we use them to reimagine what’s possible?

One example I often think about is Evernote. Back when it was at its peak, tagging content in Evernote wasn’t just a way to stay organized, it was an early lesson in the art of curation. You weren’t just collecting links or notes. You were actively building a knowledge system, grouping related ideas, and creating frameworks that could grow with you. Most people, including researchers, said “The pen is mightier than the keyboard,” because our ability to adapt our habits, workflows, and skills to emerging technologies has always been mediocre. Dr. Beth Holland and I shared this back in 2016 in this article for EdWeek.

Despite what the media said, when we engaged in this deeply human work, students had quite a different story to share, like these graduate students at USC in 2014, when we first introduced iPads and Notability.

Today, that same concept is being reintroduced in NotebookLM, where educators can tag sources, synthesize research, and generate custom overviews from curated inputs. It’s a powerful reminder that some of the best “new” ideas in AI aren’t new at all, they’re long-standing practices finally given room to scale.

Another example is in how we think about assessment.

When I first started teaching, I leaned heavily on Grant Wiggins and Jay McTighe’s backward design model. The second question, “How will students show me what they know?”, was the one whose answer changed year after year. What started as posters, Scantrons, and essays changed in 2012 when my principal handed me a class set of iPads to pilot.

I was hesitant. You’d never believe it knowing me now, but I was one of those who thought technology was going to destroy learning.

But I’ll never forget what happened when I asked students to make a video instead of writing a paper. The depth, the personality, the critical thinking, all of it came through in ways I hadn’t seen before. It didn’t replace writing. It enhanced it. It gave them a reason to care, and a way to reflect.

That was more than a decade ago.

This is yet another example from students about how technology expanded their critical thinking, their empathy, and above all their contribution to their community. Again this is 2014. Well before ChatGPT.

Imagine where we could be now if we had treated those tools, not as novelties or replacements, but as opportunities to rethink how we design learning. If we had built from that moment instead of defaulting back to what was familiar.

The truth is, we failed at incremental innovation. We had the tools, but we didn’t build the systems. Some might say it’s too late. I absolutely disagree.

One of the hardest parts about this work has been eliminated:

You no longer have to do this alone. Not only do you have people and decades of research, but you also have AI tools that can assist with the very barriers people ran into when trying to scale these initiatives.

But we need to move fast, and with far more clarity than before.

We now have three classes of graduating students who are walking into the world drastically unprepared for what work and life will be like. And worse, many do not have the agency to shape what comes next.

So yes, I’m excited about what Google has given people.

Not because it checks the AI box. But because it supports the real work: clarity, coherence, and creativity in service of better systems.

Above all, it helps people in my role offer others an alternative to the marketed tools.

If you're ready to move from scattered tools to systems of support, this is the moment to rethink your approach. And we don’t have to do it alone.

If you want to see what this looks like, click here to watch my free webinar.

AI won’t replace us.

But it will challenge us to design with more intention than ever before.
